Introduction To Open AI Vector Embeddings

Do you know what vector embeddings are and why they are useful? In a previous post, we talked about these concepts. In this post, we'll show you how to generate embeddings and do cool things with them using OpenAI.

Let's get started!

Why use Open AI vector embeddings?

- Open AI is the company behind ChatGPT and they offer vector embeddings as a service

- Open AI's GPT models are best in class and trained on vast amounts of data. As I mentioned in the previous post, the quality of vector embeddings depends on the model. Better model = Better embeddings

- The Open AI embeddings API is super easy to use and has a negligible cost

Prerequisites:

Here's what you'll need before you can start coding:

- An OpenAI developer account (They provide free credits for the first 3 months)

Step 1:

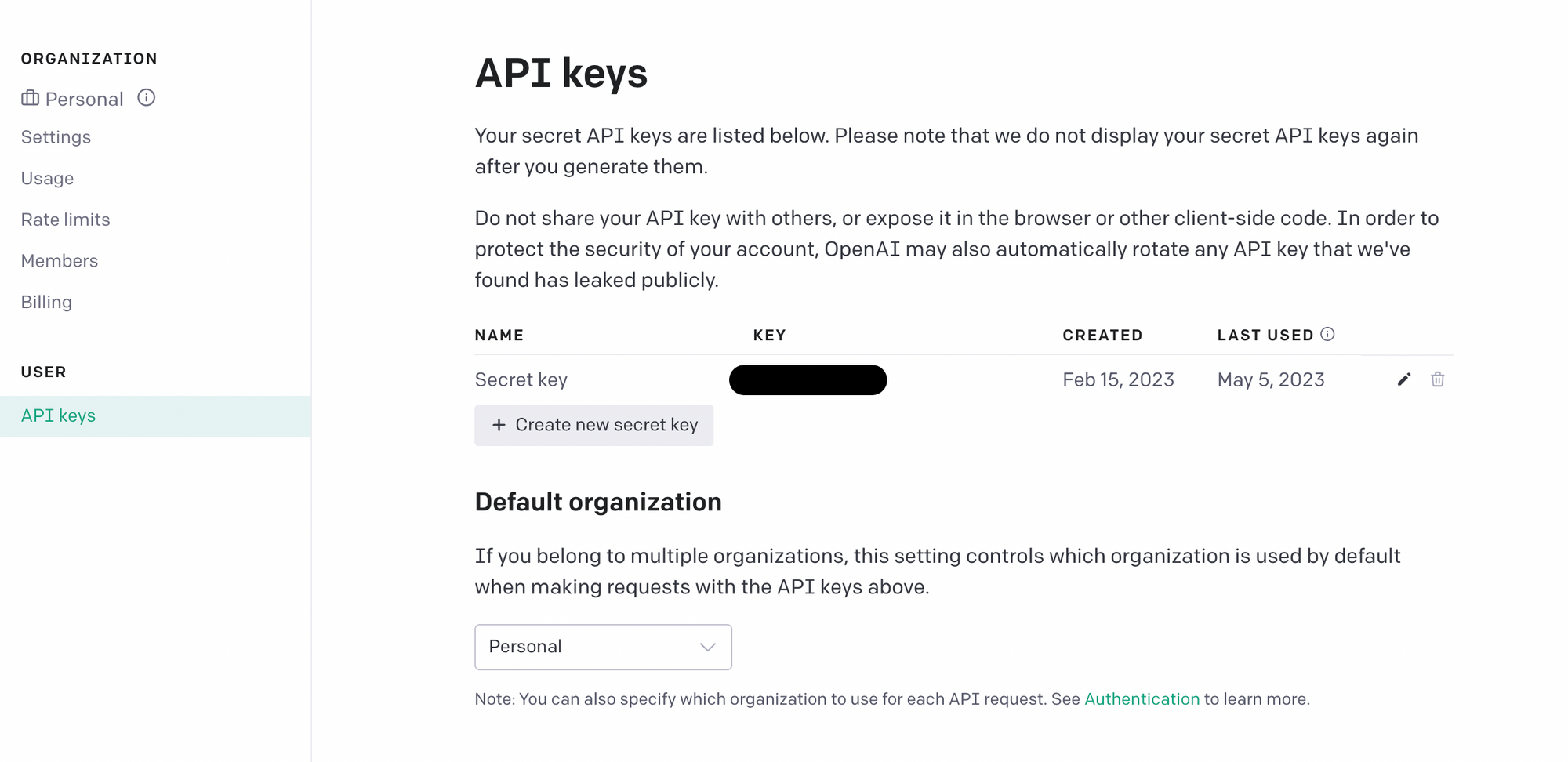

Create an API key in the OpenAI developer account. Just click, Create new secret key and save the API key to a safe location as you will not be able to see it again.

Step 2:

Install the Open AI python package

pip install openaiStep 3:

Import package and set up API key variable

import openai

openai.api_key = "YOUR-OPENAI-API-KEY"Step 4: Creating a vector embedding

Creating a vector embedding using OpenAI's python SDK is pretty easy

text = "This is an test sentence"

embedding = openai.Embedding.create(input=[text], model="text-embedding-ada-002")['data'][0]['embedding']Here we are calling the create method of the embedding API with the text as our input. The model parameter allows us to pick which OpenAI model we want to use to create our embeddings. The text-embedding-ada-002 is the best embedding model currently offered, so we'll go with that.

If you print out the variable embedding, you should see something like this:

[0.005899297539144754, -0.006522643845528364, 0.004337718710303307, -0.0038621763233095407, -0.020242687314748764, 0.021862102672457695, -0.0068889399990439415, ...]These numbers don't mean much to us, but this is how the model represents text data.

Alright, onto the fun stuff!

Of course, having a single embedding isn't going to do much for us. We need to create multiple embeddings if we want to compare them to one another. We can do this by creating a pandas dataframe to store the text and their respective embeddings, so we can keep track of our data.

For example, we can use some sample text taken from a history textbook.

texts = [

"In June 1775, the city of New York faced a perplexing dilemma. Word arrived that George Washington, who had just been named commander in chief of the newly formed Continental army, was coming to town. But on the same day, William Tryon, the colony’s crown-appointed governor, was scheduled to return from Britain.",

"The outcome of the Great War for Empire left Great Britain the undis- puted master of eastern North America. But that success pointed the way to catastro- phe. Convinced of the need to reform the empire and tighten its administration, British policymakers imposed a series of new administrative measures on the colonies.",

"More important, col- onists raised constitutional objections to the Sugar Act. In Massachusetts, the leader of the assembly argued that the new legislation was contrary to a fundamental Principall of our Constitution: That all Taxes ought to originate with the people.",

"Jefferson and the Republicans promoted a west- ward movement that transformed the agricultural economy and sparked new wars with Indian peoples. Expansion westward also shaped American diplomatic and military policy, leading to the Louisiana Purchase, the War of 1812, and the treaties negotiated by John Quincy Adams.",

"Merchants John Jacob Astor and Robert Oliver became the nation’s first mil- lionaires. After working for an Irish-owned linen firm in Baltimore, Oliver struck out on his own, achieving affluence by trading West Indian sugar and coffee."

]df = pd.DataFrame(data=texts, columns=['text'])

vector_embeddings = []

for index, row in df.iterrows():

text = row.text

embedding = openai.Embedding.create(input=[text], model="text-embedding-ada-002")['data'][0]['embedding']

vector_embeddings.append(embedding)

df['embeddings'] = vector_embeddings

df.to_csv("sample_text_with_embeddings.csv", index=False)Great! If you take a look at the saved CSV file you'll see the text and the vector embedding for that text.

Comparing Embeddings Using Cosine Similarity

Now, let's talk about how we can compare embeddings using cosine similarity. Cosine similarity gets the distance between two vectors and represents it as a single number. We can use this technique to see how similar one piece of text is to another piece of text.

Lets compare the first two sentence in the dataframe

import json

from openai.embeddings_utils import cosine_similarity

df = pd.read_csv("sample_text_with_embeddings.csv")

text1_embedding = json.loads(df.iloc[0]['embeddings'])

text2_embedding = json.loads(df.iloc[1]['embeddings'])

cosine_sim = cosine_similarity(text1_embedding, text2_embedding)

If we compare the first two sentences in our dataframe, we'll get a cosine similarity of 0.8137. These two pieces of text are pretty similar because they are pulled from the same paragraph. But if we check the cosine distance between the first and last piece of text, the similarity will be smaller because they are less relevant to each other.

Thinking Bigger!

The example above just shows you how to check how similar two pieces of text are. In this case we only have 5 pieces of text, so you can manually check the distances between them.

But suppose, I had an entire chapter full of text from a history textbook. How would you handle large amounts of data and what would you be able to do with it?

Well, we can use vector similarity to find the closest matching pieces of text. For example, we can search for particular information in the textbook and find the pieces of text that are most similar to our query.

I won't extract text from the whole chapter, but we can use the sample text we used previously to understand how this works.

df = pd.read_csv("sample_text_with_embeddings.csv")

question = "What did westward expansion lead to?"

question_vector = openai.Embedding.create(input=[question], model="text-embedding-ada-002")['data'][0]['embedding']

df['similarities'] = df['embeddings'].apply(lambda x: cosine_similarity(json.loads(x), question_vector))

df = df.sort_values("similarities", ascending=False)

print(df.head())text ... similarities

3 Jefferson and the Republicans promoted a west-... ... 0.854431

1 The outcome of the Great War for Empire left G... ... 0.817069

0 In June 1775, the city of New York faced a per... ... 0.771593

4 Merchants John Jacob Astor and Robert Oliver b... ... 0.769621

2 More important, col- onists raised constitutio... ... 0.760737Printing the dataframe, you can see which pieces of text are most similar to the query query.

What's next? -> Vector Databases

Using dataframes to store our data will not work very well when we have large amounts of text. Calling the cosine_similarity function for each row of data is not ideal if we want to quickly get our answers. This is where vector databases come in. Vector databases make it much easier to store and search for unstructured data. I'll be writing a post about it soon, so be sure to subscribe if you don't want to miss it.

Conclusion

In this post, we've shown you how to generate embeddings using OpenAI's Python SDK, how to compare embeddings using cosine similarity, and how to build a primitive version of semantic search using vector similarity to analyze large amounts of data. So go ahead and try it out!

Member discussion